Complete Guide: From Ollama Installation to Mobile App Integration

Overview

This guide walks through installing Ollama, a local LLM server, configuring it so other devices on your network can reach it, and connecting to it from the MyOllama mobile app.

1. Introduction to Ollama

Ollama is an open-source project for running LLMs such as Llama, Mistral, and Gemma locally on a personal computer. Because the models run on your own hardware, prompts and responses stay on your machine, and a built-in REST API lets other applications, such as mobile clients, use the same models.

2. Installation Guide

macOS

brew install ollama

Linux (Ubuntu/Debian)

curl -fsSL https://ollama.com/install.sh | sh

Windows

- Download the Windows installer from ollama.com/download

- Run the installer

- Follow the installation wizard
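After installing on any platform, a quick check confirms that both the CLI and the background server are working. This is a minimal sketch, assuming the server uses the default address http://localhost:11434; `ollama_running` is a hypothetical helper name. It relies on the server's root endpoint answering with the plain text "Ollama is running".

```shell
#!/usr/bin/env bash
# Post-install check: is the CLI on PATH, and is the server answering?

# ollama_running (hypothetical helper): returns 0 if the server at the given
# base URL (default http://localhost:11434) answers GET / with "Ollama is running".
ollama_running() {
  local base_url="${1:-http://localhost:11434}"
  curl -fsS --max-time 3 "$base_url/" 2>/dev/null | grep -q "Ollama is running"
}

if command -v ollama >/dev/null 2>&1; then
  ollama --version
else
  echo "ollama CLI not found on PATH" >&2
fi

if ollama_running; then
  echo "server: up"
else
  echo "server: not reachable; start it with 'ollama serve' or the desktop app"
fi
```

With the Homebrew formula on macOS, the server can also be run in the background with `brew services start ollama`.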

3. Model Management

Basic Commands

# Download a model
ollama pull llama2
# Run a model
ollama run llama2
# List downloaded models
ollama list
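The same information is available over the REST API: GET /api/tags returns the locally installed models, which is what `ollama list` shows. The sketch below extracts the model names from that response with grep and sed, assuming the compact, single-line JSON the server normally returns and names without escaped quotes. `model_names` is a hypothetical helper; if jq is installed, `jq -r '.models[].name'` is the more robust choice.

```shell
#!/usr/bin/env bash
# model_names (hypothetical helper): read an /api/tags JSON response on stdin
# and print one model name per line. Assumes compact JSON with no escaped
# quotes inside names; use jq for anything more robust.
model_names() {
  grep -o '"name":"[^"]*"' | sed -e 's/^"name":"//' -e 's/"$//'
}

# Usage against a running server (not executed here):
#   curl -s http://localhost:11434/api/tags | model_names
```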

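4. External Access Configuration

By default, Ollama accepts connections only from the local machine (127.0.0.1:11434). Setting the OLLAMA_HOST environment variable to 0.0.0.0 makes it listen on all network interfaces, so other devices, such as a phone running MyOllama, can connect.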

macOS Configuration

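# The Ollama macOS app reads environment variables set through launchctl
launchctl setenv OLLAMA_HOST "0.0.0.0"
# Then quit and reopen the Ollama app so the setting takes effect
# If you run the server manually from a terminal instead:
OLLAMA_HOST=0.0.0.0 ollama serve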

Linux (Ubuntu) Configuration

# Create (or overwrite) the service file
# Note: User=root stores models under /root/.ollama instead of the ollama user's home
sudo tee /etc/systemd/system/ollama.service << EOF
[Unit]
Description=Ollama Service
After=network.target
[Service]
ExecStart=/usr/local/bin/ollama serve
Environment="OLLAMA_HOST=0.0.0.0"
Restart=always
User=root
[Install]
WantedBy=multi-user.target
EOF
# Reload systemd, then enable the service and (re)start it so the new setting applies
sudo systemctl daemon-reload
sudo systemctl enable ollama
sudo systemctl restart ollama
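The official Linux install script already creates an `ollama.service` unit that runs as a dedicated `ollama` user, so an alternative to replacing the unit file above is a systemd drop-in that only adds the environment variable (`sudo systemctl edit ollama.service` opens such a file interactively). The non-interactive sketch below writes the same kind of override file; `write_ollama_override` is a hypothetical helper, and its directory argument exists so it can be tried against a scratch directory first.

```shell
#!/usr/bin/env bash
# write_ollama_override (hypothetical helper): write a systemd drop-in that sets
# OLLAMA_HOST for the existing ollama.service instead of replacing the unit.
# Needs root when targeting the real /etc/systemd path.
write_ollama_override() {
  local dir="${1:-/etc/systemd/system/ollama.service.d}"
  local host="${2:-0.0.0.0}"
  mkdir -p "$dir" || return 1
  printf '[Service]\nEnvironment="OLLAMA_HOST=%s"\n' "$host" > "$dir/override.conf"
}

# Usage on a real system (as root):
#   write_ollama_override
#   systemctl daemon-reload
#   systemctl restart ollama
```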

Windows Configuration

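# Set OLLAMA_HOST for your user account (run in PowerShell)
setx OLLAMA_HOST 0.0.0.0
# setx only affects newly started programs: quit Ollama from its system tray icon,
# then start it again from the Start menu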
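Whichever platform hosts the server, the change can be confirmed from a second machine on the same network by requesting GET /api/version, which returns a small JSON object containing a "version" field. A minimal sketch; `remote_version` and `parse_version` are hypothetical helpers, and 192.168.1.50 in the usage comment is a placeholder address.

```shell
#!/usr/bin/env bash
# parse_version (hypothetical helper): extract the "version" value from a
# compact JSON body read on stdin.
parse_version() {
  sed -n 's/.*"version":"\([^"]*\)".*/\1/p'
}

# remote_version (hypothetical helper): query a server by IP or hostname and
# print its version; fails if the host is unreachable or refuses the connection.
remote_version() {
  local host="$1" port="${2:-11434}"
  [ -n "$host" ] || { echo "usage: remote_version HOST [PORT]" >&2; return 2; }
  local body
  body=$(curl -fsS --max-time 3 "http://$host:$port/api/version") || return 1
  printf '%s\n' "$body" | parse_version
}

# Usage from another device (placeholder address):
#   remote_version 192.168.1.50
```

If this works from a laptop but not from the phone, the firewall or network isolation on the Wi-Fi is the more likely culprit than the Ollama setting.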

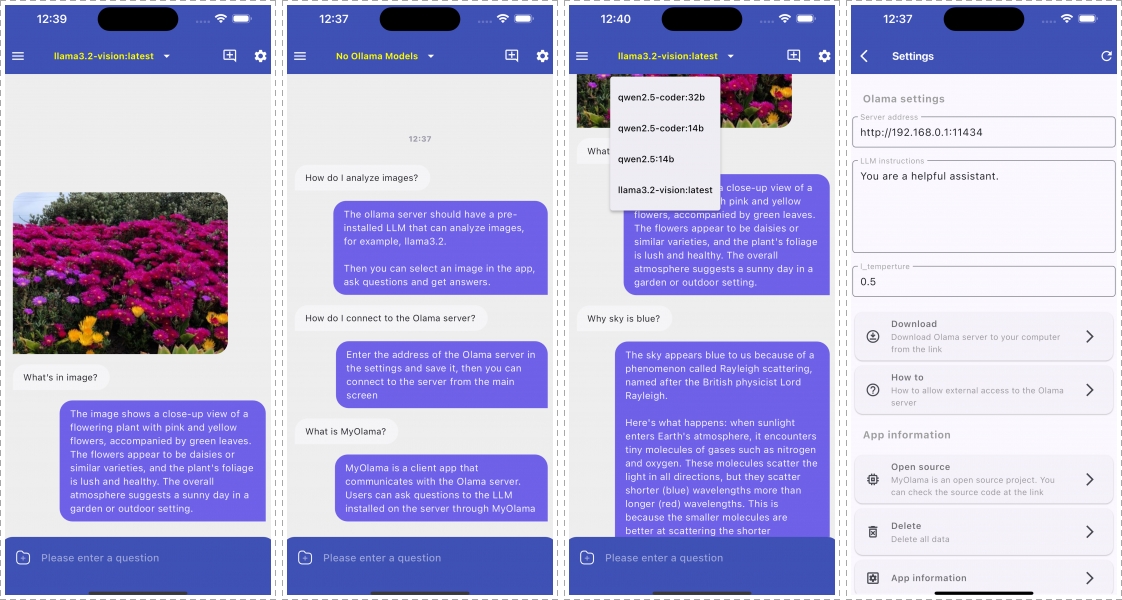

5. MyOllama Integration

Installation

- Install from the App Store: https://apps.apple.com/us/app/my-ollama/id6738298481

- Build from GitHub: https://github.com/bipark/my_ollama_app

Configuration Steps

- Launch MyOllama

- Navigate to Settings

- Enter the Ollama server URL: http://<your-ip>:11434, replacing <your-ip> with the LAN IP address of the machine running Ollama

- Test the connection
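To fill in `<your-ip>`, look up the server's LAN address on the machine running Ollama (on Windows, `ipconfig` lists it as the IPv4 Address). This sketch assumes the active interface on macOS is `en0`, which is common but not guaranteed, and that `hostname -I` is available on Linux; `server_ip` and `ollama_url` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# server_ip (hypothetical helper): print this machine's LAN IPv4 address.
server_ip() {
  case "$(uname -s)" in
    Darwin) ipconfig getifaddr en0 ;;              # assumes en0 is the active interface
    Linux)  hostname -I | awk '{print $1}' ;;      # first address listed
    *)      echo "unsupported OS; look up the IP manually" >&2; return 1 ;;
  esac
}

# ollama_url (hypothetical helper): format the URL to enter in MyOllama.
ollama_url() {
  local ip="$1" port="${2:-11434}"
  [ -n "$ip" ] || return 1
  printf 'http://%s:%s\n' "$ip" "$port"
}

# Usage on the server machine:
#   ollama_url "$(server_ip)"
```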

Usage

- Select a model from the list of models available on the server

- Start a new conversation

- Enter a prompt to begin chatting

6. Troubleshooting Guide

Common Issues and Solutions

Connection Issues

# Check that the service is running and listening on port 11434
systemctl status ollama      # Linux
sudo lsof -i :11434          # macOS
# Verify firewall settings
sudo ufw status              # Linux
sudo pfctl -s rules          # macOS
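A common cause of connection failures is a server that is running but still bound to 127.0.0.1, meaning the OLLAMA_HOST setting was not picked up. The helper below prints the listening socket for the port so the bound address is visible; a listener on all interfaces typically appears as 0.0.0.0:11434 in `ss` output or *:11434 in `lsof` output. It is a sketch that prefers `ss` (Linux) and falls back to `lsof` (macOS); `port_listening` is a hypothetical name.

```shell
#!/usr/bin/env bash
# port_listening (hypothetical helper): show any TCP listener on PORT
# (default 11434) and return 0 if one exists, non-zero otherwise.
port_listening() {
  local port="${1:-11434}"
  if command -v ss >/dev/null 2>&1; then
    # Linux: drop the header line; any remaining line is a listener.
    ss -ltn "sport = :$port" | tail -n +2 | grep .
  elif command -v lsof >/dev/null 2>&1; then
    # macOS: lsof exits non-zero when nothing matches.
    lsof -nP -iTCP:"$port" -sTCP:LISTEN
  else
    echo "neither ss nor lsof is available" >&2
    return 2
  fi
}

# Usage:
#   port_listening 11434 || echo "nothing is listening on 11434"
```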

Model Loading Failures

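# Clear the model cache (WARNING: deletes every downloaded model; each must be pulled again)
# Linux service installs keep models in /usr/share/ollama/.ollama/models instead
rm -rf ~/.ollama/models/*
# Verify system resources
free -h    # Memory (Linux)
df -h      # Disk space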
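A more targeted alternative to clearing the whole cache, assuming only one model is damaged: check free space where models are stored, then remove and re-download just that model with `ollama rm` and `ollama pull`. `free_gb` and `repull_model` are hypothetical helpers; `free_gb` defaults to ~/.ollama/models, so pass the service's model directory on a Linux service install.

```shell
#!/usr/bin/env bash
# free_gb (hypothetical helper): print whole gigabytes available on the
# filesystem holding DIR (default ~/.ollama/models, or $HOME if DIR doesn't exist).
free_gb() {
  local dir="${1:-$HOME/.ollama/models}"
  [ -d "$dir" ] || dir="$HOME"
  # df -P prints one portable line per filesystem; column 4 is available 1K blocks.
  df -Pk "$dir" | awk 'NR==2 { print int($4 / 1048576) }'
}

# repull_model (hypothetical helper): delete one model and download it again.
repull_model() {
  local model="$1"
  [ -n "$model" ] || { echo "usage: repull_model MODEL" >&2; return 2; }
  ollama rm "$model" && ollama pull "$model"
}

# Usage:
#   echo "free space: $(free_gb) GB"
#   repull_model llama2
```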

Security Considerations

Network Security

- Implement firewall rules

# Ubuntu UFW example: allow only the local subnet to reach Ollama
sudo ufw allow from 192.168.1.0/24 to any port 11434
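UFW checks rules in order and applies the first match, so a common pattern is to allow the trusted subnet first and then deny the port for everyone else. The sketch below only prints the commands for review rather than running them; `lan_only_rules` is a hypothetical helper, and 192.168.1.0/24 is the example subnet from above, to be replaced with your own.

```shell
#!/usr/bin/env bash
# lan_only_rules (hypothetical helper): print, but do not run, UFW commands
# that allow SUBNET to reach PORT over TCP and then deny it for everyone else.
lan_only_rules() {
  local subnet="$1" port="${2:-11434}"
  [ -n "$subnet" ] || { echo "usage: lan_only_rules SUBNET [PORT]" >&2; return 2; }
  echo "sudo ufw allow from $subnet to any port $port proto tcp"
  echo "sudo ufw deny $port/tcp"
}

# Review the output, then run it:
#   lan_only_rules 192.168.1.0/24 | sh
```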

Best Practices

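- Use a VPN for remote access instead of exposing port 11434 to the internet

- Keep the operating system and Ollama up to date

- Monitor the server logs for unexpected access

- Ollama has no built-in authentication; if you need it, place the server behind a reverse proxy that enforces authentication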
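For monitoring, the Ollama server writes its log to the systemd journal on Linux service installs and to ~/.ollama/logs/server.log on macOS; the request lines it logs typically include the client IP address, which shows which devices are using the server. A sketch; `ollama_log_cmd` is a hypothetical helper that prints the command to follow the log for a given OS name, as reported by `uname -s`.

```shell
#!/usr/bin/env bash
# ollama_log_cmd (hypothetical helper): print the command that follows the
# Ollama server log for an OS name (defaults to the output of `uname -s`).
ollama_log_cmd() {
  local os="${1:-$(uname -s)}"
  case "$os" in
    Linux)  echo "journalctl -u ollama -f" ;;                # may need sudo
    Darwin) echo "tail -f $HOME/.ollama/logs/server.log" ;;
    *)      echo "unknown OS: $os" >&2; return 1 ;;
  esac
}

# Usage: run the printed command directly
#   eval "$(ollama_log_cmd)"
```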

Technical Specifications

- Default Port: 11434

- Protocol: HTTP

- API Format: REST

- Response Format: JSON
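In practice, a request is a JSON body POSTed over HTTP to an endpoint such as /api/generate. By default the reply streams back as a sequence of JSON objects, one per line; setting "stream": false returns a single JSON object instead. The helper below is a minimal sketch (`generate_payload` is a hypothetical name) that builds the body with printf and deliberately rejects input it cannot safely encode; for arbitrary prompts, build the JSON with a real encoder such as jq.

```shell
#!/usr/bin/env bash
# generate_payload (hypothetical helper): print a JSON body for POST /api/generate.
# Arguments: MODEL PROMPT [STREAM], where STREAM is "true" or "false" (default false).
# Refuses double quotes, backslashes and newlines; other control characters are not checked.
generate_payload() {
  local model="$1" prompt="$2" stream="${3:-false}"
  [ -n "$model" ] || { echo "usage: generate_payload MODEL PROMPT [STREAM]" >&2; return 2; }
  case "$stream" in
    true | false) ;;
    *) echo "stream must be true or false" >&2; return 2 ;;
  esac
  case "$model$prompt" in
    *'"'* | *'\'* | *$'\n'*)
      echo "quotes, backslashes and newlines are not supported here" >&2
      return 2 ;;
  esac
  printf '{"model":"%s","prompt":"%s","stream":%s}\n' "$model" "$prompt" "$stream"
}

# Usage with a running server (one JSON object back because stream is false):
#   curl -s http://localhost:11434/api/generate \
#     -d "$(generate_payload llama2 'Why is the sky blue?')"
```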

Additional Resources

Version Information

- Document Version: 1.0.6

- Ollama Version: Latest

- Last Updated: 2024

For technical support or additional information, please create an issue in the respective GitHub repository or consult the official documentation.